Labeling data for Autonomous Driving is not just very tedious and time-consuming: it is actually one of those times where annotating data the right way is fundamentally a matter of life and death.

Luckily, a tremendous quantity of Autonomous Driving data has been annotated over the years, and some best practices have eventually emerged over time. The one thing that keeps prevailing over this know-how inherited from other people and teams though, is just good old common sense. Machine Perception, as the term indicates, is all about making an autonomous system perceive and interpret the world as a human would, and unsurprisingly, the consequences from a mistake made by an autonomous vehicle is not dramatically different from the consequences from the same mistake made by a human driver at the wheel. Therefore, in a way, the human annotator needs to think as if the image he/she is working on were really the scene he/she was experiencing while driving a vehicle.

With all that being said, a few additional challenges arise when annotating an autonomous dataset.

- First, ML models do not have an understanding of the context which would otherwise allow them to extrapolate from a situation. Hence, the annotator needs to infuse the context in the way he/she labels the data.

- Second, as supervised models, they also do not naturally have the ability to self-correct, so once the model learns a pattern to recognize an object, this “knowledge” (whether faulty or not) remains embedded into the model’s parameters.

Generally speaking, for those two reasons, mistakes made when annotating are often less forgivable than they would be in real life, and the annotator needs to think about everything that could potentially go wrong. This also means that it is the annotator’s responsibility to try and extrapolate the potential effect of a specific annotation mistake on the model. Easier said than done knowing that Deep Learning models (which are predominantly used in Computer Vision systems) are black box models which make them hardly interpretable.

Intuitively reverse-engineering a perception model, discovering which mistakes are “forgivable” and which ones can prove lethal on the road, as well as deriving best practices for annotation is all we will focus on in the remainder of this article.

Consequences of Failing to Annotate an Object

Ghosts, Reflections, Shadows

In the context of data labeling, the term ghost generally refers to secondary images of an object produced by the environment. Ghosts can be clear – like in the case of the reflection of an object in a mirror -, or can be more faint, such as when the object reflects on a wet road after the rain.

An important consideration when annotating Autonomous Driving data is, of course, whether or not ghosts should be annotated as actual objects. And as discussed above, answering this question goes through evaluating the risks of considering the ghost image one way or another. What would happen to the model if the reflection on the image below were not to be marked as cars? Clearly, if those “ghosts” were missed at inference time, it would not represent a risk; if anything, it would avoid that the autonomous vehicle stopped unexpectedly, for no reason. However, we also have to consider whether not marking them could reduce the recall on the car objects at inference.

This is where understanding how Deep Learning models analyze images helps a lot. It turns out that CNNs actually identify objects through texture rather than shape. Therefore, it is very unlikely that not including those wet reflections as vehicles in the training data would have a negative incidence on the model’s recall.

This, however, also means that it might be safer to annotate clearer reflections which do not distort the original object (such as the reflection of an object in a mirror), knowing that for the model, such reflections would be indistinguishable from the original anyways and that failing to include them could dramatically impact recall.

To Infer or Not to Infer, That is the Question

We might not always be fully aware of it, but our brains are constantly inferring from the situation, based on our own understanding of the world.

Screenshots and videos of traffic on streets and highways are among the most common scenes for us, and therefore, we are particularly prone to extrapolate from the context. A tiny green dot far away in the background at night? It’s just got to be a green light – what else could it be? Two horizontally aligned dots of red or white in the fog? Those are cars for sure!

And yet, when annotating data, should we really mark those examples as what we assume them to be?

Again, let’s discuss the risk of not annotating the dots below as cars. Missing a car could be fatal to the occupants of the autonomous vehicle. Hence, naturally, it is safer to mark both sets of lights as a car. That being said, let’s note that such a configuration can only happen when the vehicles are in a significant distance. If they are really far away, failing to recognize them as cars might not have such a dramatic impact after all. One might want, then, to use their best judgment to decide whether or not to annotate those unidentified objects based, for example, on the distance between the two dots.

The problem of the lights in the fog or in the night is particularly common when annotating Autonomous Driving data, but there are actually countless more situations where labeling the data might require extrapolating from the context; and each and every single time, it is important to analyze the consequences of including that context.

Take the example of a snowed-in car. We humans know that it is a car; unless it has been fed explicitly similar examples during training, a model would not. In that specific situation, marking the “snowcar” as a car could actually cause issues to the model which might come to expect a snowcap as a typical feature of a car; this could mean that cars without snow on their roofs might simply be interpreted as not being cars. It is increasingly likely that the model would struggle to categorize such an object as a special case of a car because snow has an entirely different texture than that of typical cars. If anything, cars with snow on their rooftops should be considered as a different class. It is reasonable though to assume that cars with a thick layer of snow will only be found on the sideroad, and hence do not need to be detected as cars when the vehicle is operating.

When is Small too Small?

Should all objects be annotated regardless of their size? Most labeling guidelines do not specify a minimum size under which it is not necessary to annotate the object. This is often problematic when the density of objects is really high and it is not possible for a human being to annotate every single one of those objects. Not including a minimum size might not only make the labeling process slower and trickier; it might actually lead to a lower quality as the annotators might get discouraged and end up only partially annotating an image.

Here is the good news: in the context of Autonomous Driving, the smallest objects are typically the ones that are the furthest away, and therefore, missing them is rarely an immediate issue. It is often a better idea to ensure that the closer objects are properly annotated rather than enforcing that every single one of them is marked.

The Case of Embedded Objects

Should the passengers on a bus be annotated as people?

Missing passengers at inference time would have no consequences whatsoever (assuming the “container” is detected properly) as the autonomous vehicle would already be configured to stop when coming across the bus. The remaining question though is whether or not failing to annotate the people on the bus could bias the model. In order to answer that question, it pays off to go back to the “ghost” considerations; if the passengers are hidden behind a thick layer of glass and hidden by reflections or distorted, then it is probably best not to confuse the model by annotating them. If they are clearly visible, not marking them might cause issues.

In the image below, since the passengers are actually outside of the limits of the bus, it is necessary to detect them as additional objects since they are not “protected” by the detection of the bus itself.

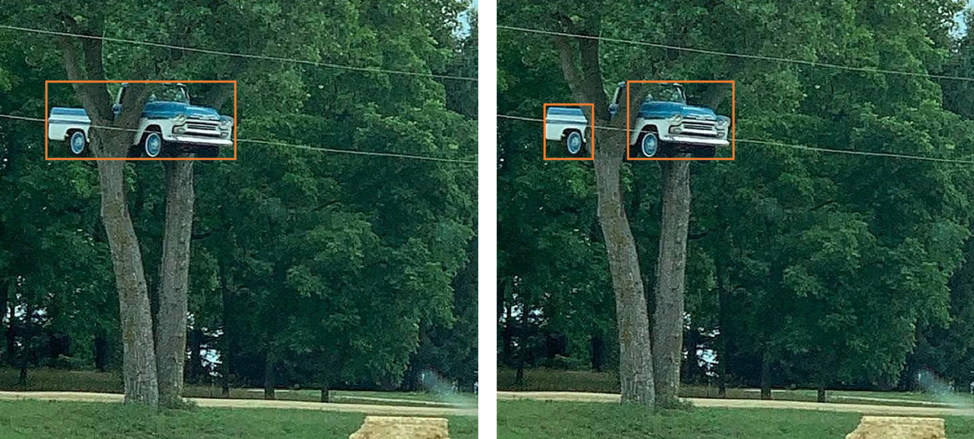

Dealing with Occlusion

How to annotate occluded objects is one of the most difficult decisions to make when preparing data for object detection. For us human beings, it is very easy to determine from the context whether or not two half-cars sticking out from behind a tree are the same vehicle or not; however, we cannot expect a standard CNN architecture to draw the same conclusion. That being said, it might not be a major issue if two parts of the same object are annotated as two separate objects, as long as they are annotated as the right type of object. As a rule of thumb, it is usually best to annotate the various non-occluded parts of an object A as being separate as we want to avoid the model to interpret the forefront object B as an integral part of object A.

As always, use good common sense as well as your knowledge of the model to make the optimal decision, and stick with the same guidelines throughout the entire dataset.

Consequences of Mistaking an Object for Another

While missing objects is probably the most common mistake in the case of object detection, it is still relatively common for annotators to pick the wrong class for an object. For example, one might mark a truck as a car, either by negligence or as a result of an ambiguous situation.

Marking a pickup truck as a truck instead of a car might feel like a big deal (and if it is in your case, then pay extra attention to making that clear when building your labeling instructions), but it might not be as problematic as you think.

In the meantime, mistaking a motorcycle for a bike might be a much bigger issue though.

Why the difference here? It all comes down to how the information is to be used downstream. A truck is, after all, only different from a car by its volume. If a truck were to be detected with the right volume, but with the wrong label, it would not represent a major issue in runtime: both cars and trucks are to be avoided by the autonomous vehicle that detects them. The only remaining challenge would be not to have the confusion between cars and trucks happen too frequently in the training dataset in order to avoid drops in model performance.

Bikes and motorcycles are a different story though, because while being both vehicles, they do not move at the same speed. An autonomous vehicle is configured to treat bikes as slow-moving objects that it expects to pass rapidly on the road. This is not the case of motorcycles. Understanding this kind of nuances might require a proper understanding of the functioning of the autonomous driving software beyond the perception layer.

This is the reason why annotators are often instructed to mark bike riders with a foot on the floor to be treated as pedestrians: as long as the bike is not in motion, the rider is actually behaving as a pedestrian and needs to be treated as such.

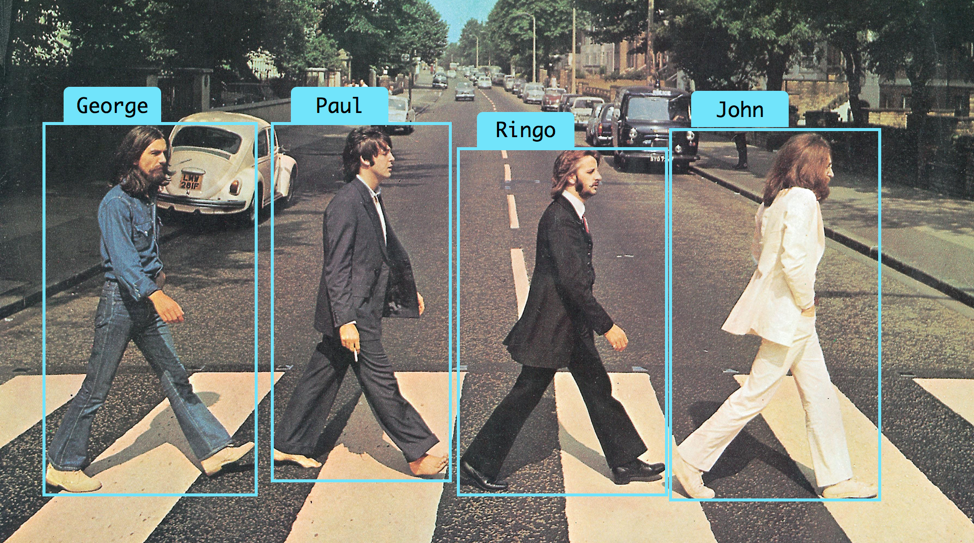

Consequences of Bounding Boxes being too Tight / too Loose

If you ever had to annotate data for object detection yourself, you must have wondered how tight to make your bounding boxes. Fitting boxes just right is actually not as easy as it seems. The general guidance is to make boxes as tight as possible while ensuring the entire object of interest fits inside. That’s because you want to capture the object in its entirety while reducing background noise.

So what happens when an object is laid off in a way that even with the tightest box possible, most pixels are still not part of the object? What about when different boxes overlap?

It might be tempting at times to find the best compromise between capturing the full object and avoiding catching too many unrelated objects in the background. Because it is important every part of an object is detected, it is best to stick to the first rule no matter what (we do not want an autonomous vehicle to smash into the mirrors of another vehicle!).

Of course, if the training dataset includes many examples causing the capture of a lot of background, this could reduce overall recall because the model might be expecting specific elements to be present in the background as an integral part of the object. For example, if too many objects were to include cars like for the George box below, the model could eventually miss pedestrians when they do not have a car in the background. If that is an issue with your data specifically, it might be a good idea to consider an instance segmentation approach instead. In any case, you need to ensure you are not sacrificing recall for precision, which is what you are doing when enforcing that the entire object fits inside the bounding box.

Mislabeling Tolerance: How Perfect Do Your Labels Need to Be?

No matter how hard you try, you just cannot have perfect annotations, whether or not the annotations are generated manually or with the help of an automatic labeling process. This is not only because of human error, but also because the annotation process is deeply non-deterministic.

With this in mind, it all comes down to finding out how imperfect you can afford the dataset to be. The good news is even though data quality is touted as the main requirement for a successful Machine Learning project, the model often offers more resilience against imperfect labels as most people assume. A preliminary study carried out by Alectio showed that while some models seem to require more than 95% labeling accuracy, others could afford a lot more noise without being too dramatically impacted. Under some circumstances, it pays off to compensate for a lower labeling accuracy with a larger volume of data, so it all comes down to whether it is a better option for you to have a higher labeling quality, or a larger volume of data.

Generally speaking, it is never a bad idea to start out with a smaller dataset to test out some labeling best practices and guidelines, and analyze how these impact the performance of the model. Then, once all weaknesses and issues have been identified, you will be able to scale your labeling process with peace of mind and better results in the end.

Best of luck with your next annotation project, and please keep in mind that failing to establish proper guidelines when annotating your Autonomous Driving dataset will almost certainly lead you to the results below!

0 Comments