At Alectio, Active Learning is one of our favorite topics: most of our Data Curation technology is built on top of it. In fact, we are on a mission to have all data scientists give it a try at least once, which is not trivial since Active Learning is not a traditional topic of Machine Learning curricula at universities.

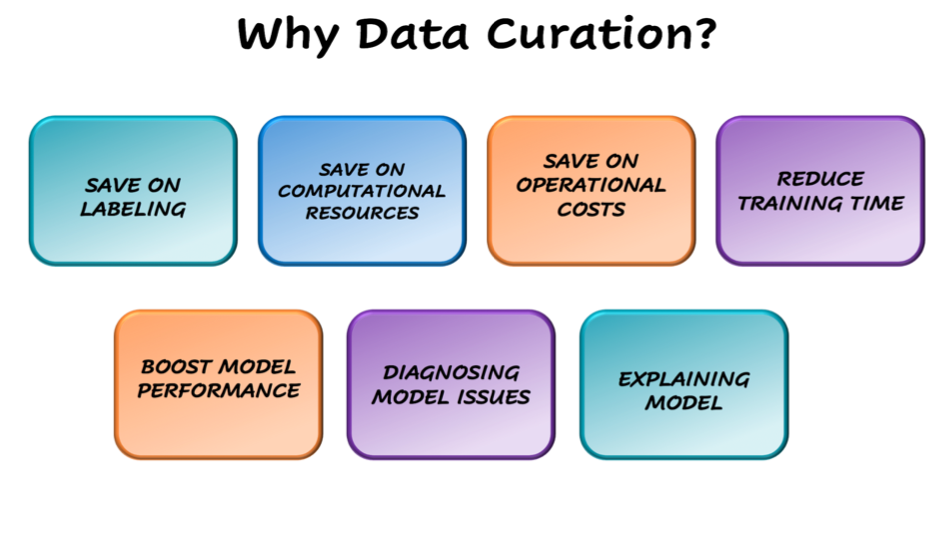

One of the reasons why Active Learning is so rarely adopted comes essentially from the fact that people view only one benefit to it: the ability to reduce the number of records to be annotated, and hence, the overall annotation budget. However, there are so many more ways in which Active Learning can benefit ML scientists. We will try to establish an exhaustive list of those benefits here in the hope of seeing you give Active Learning a try, too.

Saving Time and Money on Data Labeling

Active Learning was originally developed in order to avoid annotating the entire training dataset, which was particularly critical for academic researchers who did not have expansive budgets to annotate datasets, especially in cases where data modalities were expensive to annotate. The cost of data labeling is one of the main reasons why progress in Computer Vision was stalled for decades. It took Fei-Fei Li and her team to take upon themselves the initiative (and the cost) to annotate a new image dataset that they called ImageNet, which they made publicly available to allow researchers to develop new Deep Learning architectures to match, then eventually supercede the capability of humans when it comes to recognizing objects from pictures.

That said, when one works with their own custom yet large dataset, annotating the full dataset might simply be out of budget, which is why many organizations choose to sample (usually randomly) the training data that they send to labeling companies to be annotated. Rather than random sampling, Active Learning acts like a ranking engine that can order the training data from most useful to least useful, based on the feedback of the model. This approach not only helps reduce the amount of time and money spent on labeling, it actually acts on the root cause (which autolabeling -the practice of using a Machine Learning algorithm to generate annotation- and algorithms like Snorkel don’t do). Definitely a powerful tool to keep labeling budgets under control.

Getting Enough Training Data when Experts are Scarce

But selecting a subset of data to be annotated has another, though less advertised, advantage: it allows to develop models in fields where the data needs to be annotated by subject matter experts, like doctors (for x-ray data), dentists, translators or geophysicists. The largest labeling companies in the world are excellent at providing access to highly performant annotators for general use cases (such as autonomous driving or facial recognition, which do not require experts to manage the annotation process), but they rarely have people on staff capable of taking care of nice use cases. Oftentimes, when your data is very specific, you are on your own. This is how, for example, most oil companies hire geophysicists internally to annotate their data. In such a case, the number of records the Machine Learning team can get annotated within a specific period of time is humanly limited, and you have no choice but to be selective with the data you want to include. Here, again, Active Learning is the perfect solution.

Saving on Computational Costs

This is a tricky one, because Active Learning is traditionally perceived as being a computationally expensive technique. Why? Because it involves retraining the model sequentially with increasingly large fractions of the dataset. In fact, if you are not careful (for example, by failing to stop when the model stops improving, or by using loops of inappropriate sizes), you might end up saving money on labeling while exploding your AWS bill. In such a case, the user is essentially in a tradeoff situation.

But the research our team has done on Compute-Saving Active Learning has shown that done right, it is often possible to reduce the compute bill by almost the same amount as the labeling bill. We have written a full article on the topic that you can read here.

Reducing Model Training Time

Similarly, training a given model with Active Learning is often a lot more time consuming that simply using supervised learning, and not wasting training time involves a lot of expertise and care. However, Active Learning can still lead to speeding up very significantly the training process because of the time saved on data labeling. When annotating a large dataset of several hundreds of thousands of records, one can often see himself/herself wait for months. With Active Learning – and only a fraction of the data to annotate -, a Machine Learning team can often push the model to production a lot sooner. This is even more true when using continuous data annotation pipelines (such as those available through the Alectio Labeling Marketplace) instead of waiting for manual updates on the status of the labeling request.

Boosting Model Performance

This is where it gets really interesting. Until now, all the benefits we have covered were exclusively focused on operational benefits. But what if I told you that by using Active Learning, you could actually increase the performance of your model. This would certainly go against everything you had been taught in school: more data is supposed to lead to better generalization, and hence, better performance. However, in recent years, new research on the topic of Online Learning has demonstrated the possibility for models to get confused and “forget” concepts that they previously understood, in the same manner that students exposed only to difficult new vocabulary when learning a new language end up forgetting the initial, most simple words. By acting not just on which records, but also in which order the records are fed to the model, it is possible to significantly modify its performance. This is a very exciting area of research that I personally hope more experts will pay attention to over the next couple of years.

Diagnosing Model Issues and Weaknesses

Training a model incrementally might feel like a hustle at first (especially because it requires more complicated MLOps pipelines), but it comes with a unique advantage: the ability to track the learning process step-by-step, and record much more granular metrics. For example, with Active Learning, you get the opportunity to get a confusion matrix for each loop, and establish exactly when a specific class is being learned, or when overfitting starts occurring. It also allows to analyze the stability of parameters across time. Active Learning provides unprecedented abilities for a data scientist to analyze, for example, if two classes should be consolidated, or if a model is too deep or too narrow, making it a powerful diagnostic tool for data scientists.

Improving Model Explainability

Last but not least, by the same argument of incremental learning, Active Learning makes it possible to correlate the injection of specific data along the training process with fluctuations in the model and its learning curve. Because the selected data is annotated, it is possible to analyze how the density of information (like specific objects in the case of object detection or segmentation) changes over time, and how it correlates to changes in the pace of learning. For example, if the data runs out of trucks and the learning curve for cars stalls, it is possible not only to improve the data collection process (you could even generate the exact right synthetic data), but to gain a detailed understanding of what helps the model learn faster.

**********

Who knew that Active Learning could actually be such a powerful tool to help data scientists build and train their models faster and better? Well, we did, and that’s what we designed the Alectio Platform to do for you. But regardless of whether or not you want to make the Alectio Platform one of your preferred MLOps tools, we hope that you will give Active Learning a fair shot and explore its many benefits beyond the simple fact that it can help you keep labeling costs under control. We are certain that you will not regret it.

0 Comments